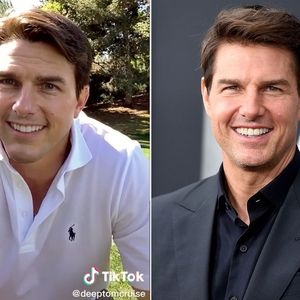

Deepfake Video of Taylor Swift Speaking Mandarin Ignites AI Ethics Debate in China

A deepfake video of pop icon Taylor Swift fluently speaking Mandarin has recently surfaced, sparking widespread discussion in China about the ethical...

Decentralized Exchange Sushi Partners with Ethics-First, Shariah-Compliant Haqq Network

Sushi.com, a major decentralized exchange, has entered into a transformative alliance with the Haqq Network, aiming to bring about a significant shift...

Pro-XRP Lawyer Says SEC Ethics Office Didn’t Approve Hinman’s Speech

Deaton said that if the SEC Ethics Office had approved Hinman’s speech, we would have heard about it long ago. CryptoLaw founder and attorney John Deaton has made...

South Korea to Amend Public Service Ethics Act, Mandating Disclosure of Cryptocurrency Holdings

The South Korean government has drafted an amendment to an existing law, which would require public officials to disclose their cryptocurrency holding...

Ethical SWOT Analysis of Cryptocurrencies: Understanding their Code of Ethics

As cryptocurrencies reshape our financial landscape, they bring with them a Pandora’s box of ethical concerns that warrant closer examination. Respons...

Related News

The ethics of the metaverse: Privacy, ownership and contro...

As the metaverse continues to grow and evolve, it is essential that we consider...

Featured News

What Are The Ethics Of Sharing Scammers’ Real Names?

It is up to the Bitcoin community to self-police by any means necessary, even if...