Quantum Black Swan: How a 2026 Quantum-Computing Breakthrough Could Upend Crypto (and Which Coins Might Survive)

A simulated quantum stress test conducted using OpenAI’s ChatGPT o3 model has raised fresh concerns about the future of digital assets. The simulation...

Google’s Quantum Computing Could Threaten Bitcoin Encryption, Warns NYDIG

The post Google’s Quantum Computing Could Threaten Bitcoin Encryption, Warns NYDIG appeared first on Coinpedia Fintech News. Every time quantum computi...

Is Bitcoin’s Security Threatened by Quantum Computing?

The post Is Bitcoin’s Security Threatened by Quantum Computing? appeared first on Coinpedia Fintech News. Elliptic Curve Cryptography is the backbone o...

Aethir, GAIB, and GMI Cloud integrate H200 GPUs into decentralized computing platforms

The integration of H200 GPUs into decentralized platforms democratizes AI development, fostering innovation and new investment opportunities globally....

HyperCycle and Penguin Group Join Forces To Redefine High-Performance Computing for AI-Driven Growth in Paraguay

June 27, 2023 – Geneva, Switzerland – HyperCycle, a groundbreaking layer zero++ blockchain infrastructure engineered for high-speed and cost-effective A...

Related News

-

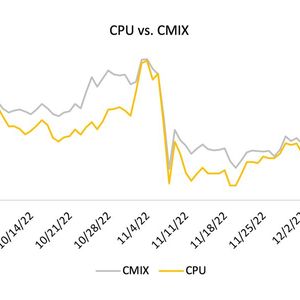

How Does Quantum Computing Impact Crypto Mining In a Disti...

In an era underscored by relentless technological evolution, our digital society...

Featured News

How does quantum computing impact the finance industry?

Quantum computing could revolutionize finance by solving complex problems quickl...